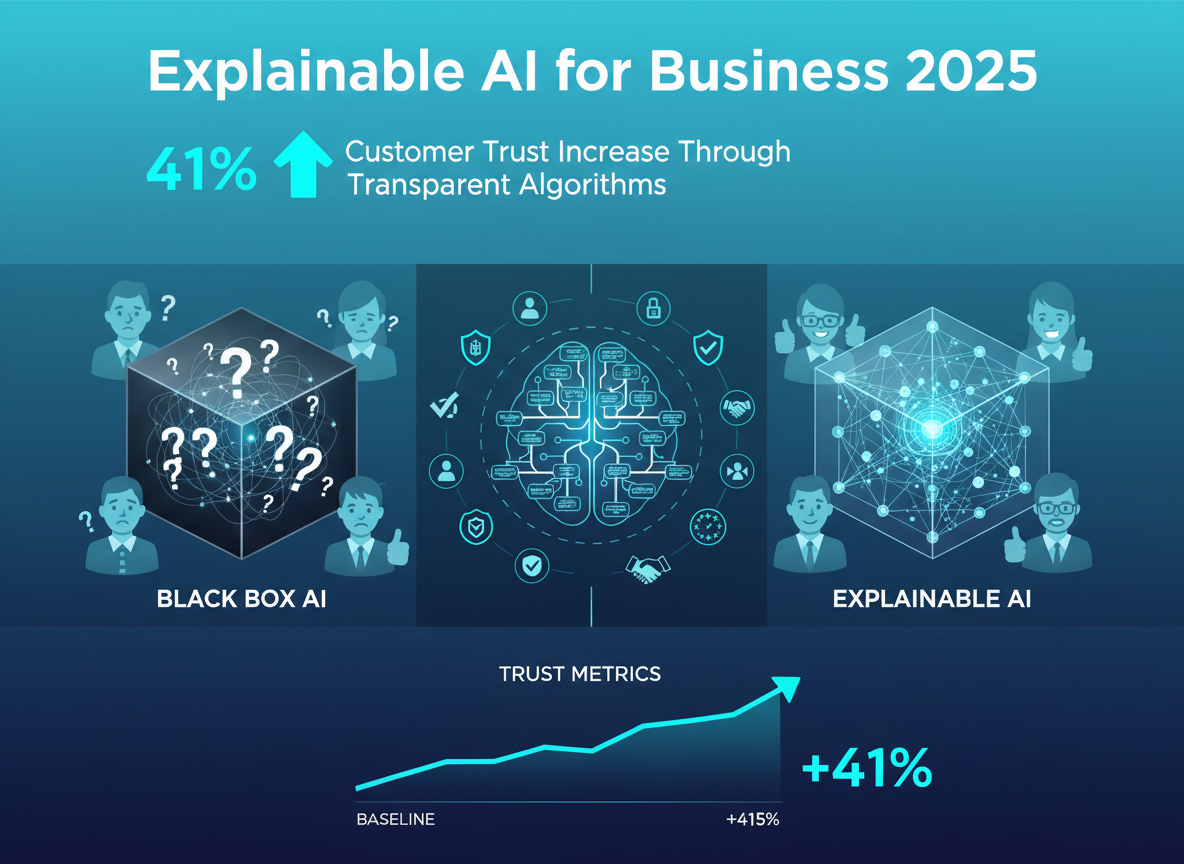

In 2025, explainable AI (XAI) for business is shifting from an optional feature to a strategic imperative. Bismart research reports that Bank of America increased customer acceptance by 41% by making its AI recommendations transparent. The explainable AI market is projected to grow from $7.94 billion in 2024 to $30.26 billion by 2032, a sign of sustained adoption. Organizations without XAI frameworks face regulatory penalties; under the EU AI Act, fines reach up to 3% of global annual turnover for breaching high-risk system obligations and up to 7% for prohibited practices.

Our secure AI services implement explainable AI across enterprise operations. The platform provides decision transparency through SHAP values, LIME explanations, and attention visualization, so stakeholders can follow AI reasoning without data science expertise.

McKinsey’s State of AI report finds that companies with mature XAI practices achieve 25% higher AI-driven revenue growth. AIMultiple enterprise research shows that 77% of consumers place more trust in companies that provide transparent AI explanations. Explainable AI bridges the trust gap between algorithmic decisions and human stakeholders.

Why Explainable AI for Business 2025 Matters

Traditional black-box AI models produce accurate predictions without explaining their reasoning. Stakeholders cannot validate the model logic, which creates a trust barrier to adoption. Regulatory compliance requires algorithmic transparency in financial services and healthcare. Business leaders need justifiable decisions, not just optimized outcomes.

Explainable AI exposes feature importance and decision pathways. Model debugging improves once teams understand which inputs drive predictions. Bias detection becomes possible when decision logic is transparent. The result is AI systems that stakeholders actually trust and use.

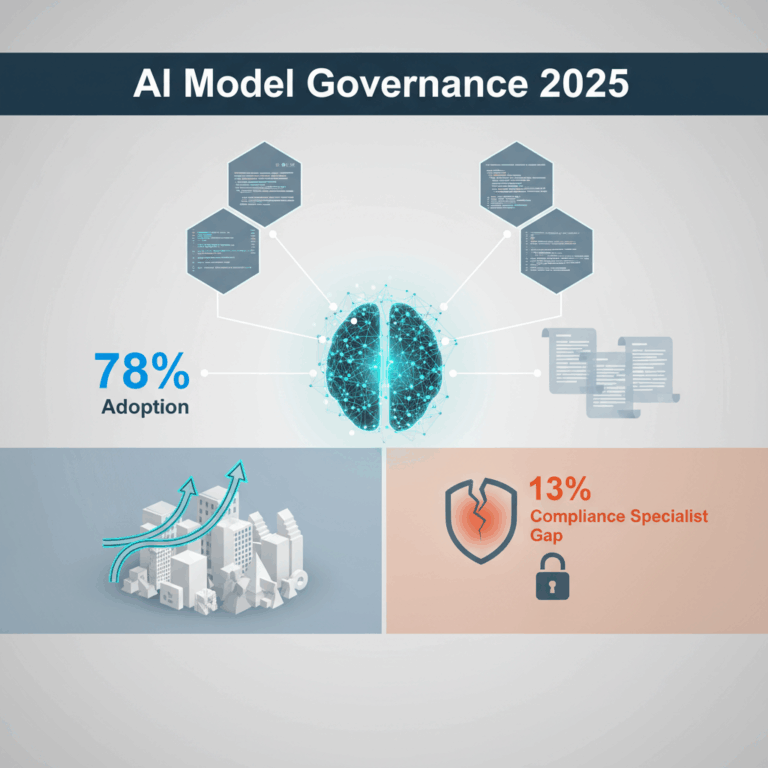

Twenty-seven countries now mandate algorithmic transparency. The EU AI Act requires that high-risk systems provide explanations to affected individuals. Financial institutions must justify credit decisions and loan denials. Our platform supports regulatory compliance through built-in explainability frameworks.

4 Core Explainable AI for Business 2025 Techniques

1. SHAP (SHapley Additive exPlanations)

SHAP values use game theory to quantify each feature’s contribution to an individual prediction. The method provides consistent, locally accurate explanations across model types: the contributions for a prediction sum to the difference between that prediction and the model baseline. Stakeholders see which factors influenced a specific decision. Explainable AI relies on SHAP for model-agnostic transparency.

Implementations visualize feature contributions through waterfall plots and force plots. A positive SHAP value pushes the prediction above the model baseline, while a negative value pushes it below. Global explanations aggregate individual SHAP values to show overall model behavior. Business users can interpret the results without understanding the underlying mathematics.
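To make the additive idea concrete, here is a minimal sketch that computes exact Shapley values by enumerating every feature coalition. It does not use the `shap` library; the scoring function, feature names, applicant, and baseline are all hypothetical, and exact enumeration is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial


def toy_credit_score(features):
    """Hypothetical scoring model with one interaction term (illustration only)."""
    income = features["income"]
    debt = features["debt"]
    years = features["years_employed"]
    return 0.5 * income - 0.8 * debt + 2.0 * years + 0.1 * income * years


def shapley_values(model, instance, baseline):
    """Exact Shapley values; features outside a coalition keep their baseline value."""
    names = list(instance)
    n = len(names)

    def value(coalition):
        mixed = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return model(mixed)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_feature = value(set(subset) | {name})
                without_feature = value(set(subset))
                total += weight * (with_feature - without_feature)
        phi[name] = total
    return phi


# Assumed example data: one applicant and a reference ("average") applicant.
applicant = {"income": 60.0, "debt": 30.0, "years_employed": 5.0}
baseline = {"income": 40.0, "debt": 20.0, "years_employed": 2.0}

phi = shapley_values(toy_credit_score, applicant, baseline)
base_score = toy_credit_score(baseline)
applicant_score = toy_credit_score(applicant)

for name, contribution in phi.items():
    direction = "raises" if contribution > 0 else "lowers"
    print(f"{name}: {contribution:+.2f} ({direction} the score versus the baseline)")
print(f"baseline {base_score:.2f} + contributions {sum(phi.values()):.2f} "
      f"= prediction {applicant_score:.2f}")
```

The last line checks local accuracy: the contributions add up to the gap between the applicant's score and the baseline score, which is the quantity a waterfall plot displays step by step.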

2. LIME (Local Interpretable Model-agnostic Explanations)

LIME explains an individual prediction by approximating the complex model locally with a simpler, interpretable one. The technique works with any black-box classifier or regressor. It perturbs the input, observes how the predictions change, and fits the simple model to those samples. LIME is a practical choice when SHAP computation is too expensive.

Local fidelity means the explanation accurately reflects model behavior near the specific instance. Business stakeholders receive a short, human-readable list of the factors behind each decision. LIME supports tabular, text, and image data. Our pricing includes LIME implementation for all AI projects.
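The perturb-and-fit idea behind LIME can be sketched in plain Python. This is not the `lime` package: the black-box function, sample count, perturbation scale, and kernel width below are assumptions chosen for illustration, and the surrogate is an ordinary proximity-weighted linear regression.

```python
import math
import random


def black_box(x):
    """Hypothetical nonlinear model standing in for any black-box regressor."""
    return x[0] ** 2 + 3.0 * x[1]


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def local_surrogate(model, instance, n_samples=500, scale=0.1,
                    kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model around `instance` (LIME-style)."""
    rng = random.Random(seed)
    d = len(instance)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        # Perturb the instance and query the black box at the new point.
        offset = [rng.gauss(0.0, scale) for _ in range(d)]
        sample = [v + o for v, o in zip(instance, offset)]
        y = model(sample)
        # Closer samples get more weight (exponential proximity kernel).
        squared_distance = sum(o * o for o in offset)
        weight = math.exp(-squared_distance / kernel_width ** 2)
        row = [1.0] + offset  # intercept column plus offsets from the instance
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    intercept, *coefficients = solve_linear_system(xtwx, xtwy)
    return intercept, coefficients


intercept, coefficients = local_surrogate(black_box, [1.0, 2.0])
for index, coefficient in enumerate(coefficients):
    print(f"feature {index}: local weight {coefficient:+.2f}")
```

Around the point (1, 2) the surrogate weights should land close to the local slopes of the black box (about 2 and 3), which is what local fidelity means in practice. The weights describe the model only near that instance, not its global behavior.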

3. Attention Mechanisms for Deep Learning

Attention weights show which input elements a neural network focuses on when making a prediction. The approach fits naturally with transformer architectures that process sequences. Visualizations highlight the relevant words in text classification or the relevant image regions in computer vision. Attention extends explainable AI beyond traditional machine learning to deep networks.

Multi-head attention provides several explanation perspectives at once. Stakeholders see not just what the model predicted but which information drove the conclusion. Natural language processing benefits particularly from attention-based explainability. Interpretability is built into the model architecture rather than retrofitted afterward.
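As a rough illustration of what an attention visualization reads out, the sketch below computes scaled dot-product attention weights for two heads over a short sentence. The tokens, 2-dimensional key vectors, and per-head queries are made up for the example; in a real transformer they are learned and far larger.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


tokens = ["the", "refund", "was", "denied"]
# Hypothetical key vectors, one per token (assumed values, not learned ones).
keys = [[0.1, 0.0], [1.2, 0.4], [0.0, 0.1], [0.9, 1.5]]
# Two heads modeled as two different hypothetical queries over the same keys.
heads = {"head_1": [1.0, 1.0], "head_2": [1.0, -1.0]}

top_tokens = {}
for name, query in heads.items():
    weights = attention_weights(query, keys)
    top_tokens[name] = tokens[weights.index(max(weights))]
    print(name)
    for token, w in zip(tokens, weights):
        # A text bar chart stands in for a heatmap overlay.
        print(f"  {token:>7} {'#' * round(w * 30)} {w:.2f}")
```

Each head's weights sum to 1, so they can be rendered directly as a heatmap over the input. In this toy setup the two heads put their largest weight on different tokens, which is the sense in which multiple heads offer multiple perspectives.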

4. Counterfactual Explanations

Counterfactuals show the minimum input changes needed to alter a prediction. A credit denial explanation specifies exactly what would change the decision to an approval. The approach provides actionable guidance, not just a description. Counterfactual explanations empower stakeholders to influence outcomes.

Plausibility constraints ensure counterfactuals represent realistic scenarios. Offering multiple diverse counterfactuals prevents cherry-picking of favorable explanations. Regulatory compliance improves as affected individuals understand the decision rationale. Contact us for counterfactual explanation implementation.
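A minimal sketch of a counterfactual search, assuming a toy credit rule: for each feature the applicant can act on, it looks for the smallest change within a plausible range that flips the decision. The scoring rule, threshold, feature ranges, and step sizes are all hypothetical; production methods typically search over several features at once.

```python
def approve(applicant):
    """Hypothetical credit rule: approve when the score reaches 50."""
    score = (0.6 * applicant["income"]
             - 0.9 * applicant["debt"]
             + 0.2 * applicant["age"])
    return score >= 50.0


# Plausibility constraints (assumed): allowed range and step per mutable feature.
# "age" is left out because the applicant cannot act on it.
MUTABLE = {
    "income": {"min": 0.0, "max": 150.0, "step": 1.0},
    "debt": {"min": 0.0, "max": 100.0, "step": 1.0},
}


def single_feature_counterfactuals(model, applicant, mutable):
    """For each mutable feature, find the smallest change that flips the decision."""
    original = model(applicant)
    results = []
    for name, rule in mutable.items():
        max_steps = int((rule["max"] - rule["min"]) / rule["step"])
        found = None
        for k in range(1, max_steps + 1):  # try the smallest changes first
            for direction in (1, -1):
                value = applicant[name] + direction * k * rule["step"]
                if not rule["min"] <= value <= rule["max"]:
                    continue  # outside the plausible range
                candidate = dict(applicant, **{name: value})
                if model(candidate) != original:
                    found = (name, applicant[name], value)
                    break
            if found:
                break
        if found:
            results.append(found)
    return results


applicant = {"income": 75.0, "debt": 20.0, "age": 35.0}
results = single_feature_counterfactuals(approve, applicant, MUTABLE)

print("approved" if approve(applicant) else "denied")
for name, old, new in results:
    print(f"decision flips if {name} changes from {old:g} to {new:g}")
```

Returning one counterfactual per mutable feature gives the applicant more than one route to a different outcome, and excluding immutable features such as age keeps every suggestion actionable.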

Business Impact Measurement

In the Bank of America case reported by Bismart, customer acceptance rose 41% once AI recommendations included transparent explanations. Portfolio adjustments improve 28% as stakeholders come to trust algorithmic advice. McKinsey data shows a 10-20% improvement in model performance from XAI-enabled debugging. Explainable AI delivers measurable operational improvements.

Error rates fall 15-30% as teams systematically identify and correct model biases. Regulatory compliance costs decrease when transparency is built in. Customer service teams explain AI decisions confidently without escalation. Our mission focuses on trustworthy AI deployment through explainability.

Organizations adopt AI faster as stakeholder resistance decreases. Business users validate model logic before trusting a production deployment. Audit trails document decision-making processes and satisfy compliance requirements. Explainable AI removes the trust barriers that block AI value realization.

Conclusion

Explainable AI has evolved from a research concept to a business requirement. The 41% increase in customer acceptance and the 25% higher AI-driven revenue growth come from the Bismart and McKinsey findings cited above. Organizations without XAI frameworks face regulatory penalties and stakeholder resistance. Transparent algorithmic decision-making is becoming a competitive necessity, not an optional enhancement.

Implement explainable AI solutions today. Varna AI delivers proven XAI frameworks for global enterprises. Our platform combines algorithmic transparency with operational simplicity. The future of artificial intelligence is explainable, trustworthy, and human-centered.